(Open Compute) the Future of AI: 1MW Racks and the Open-72 Pod

I’ve always admired the Open Compute Project (OCP) community and its push to standardise data center technology. From servers to networking, OCP has shaped the way hyperscalers build at scale.

Now, with AI pushing compute requirements through the roof, OCP is stepping up again, this time with a vision for 1MW racks and an open, modular AI pod design that could redefine infrastructure for the AI era.

Today I happened to come across this white paper, released in January 2025, which explains the AI data center explosion in a way that goes beyond the usual clichés.

The need⌗

Compute demands for AI are growing 4× annually, according to OCP, driven by generative models that require tens of thousands of GPUs.

Traditional racks delivering 10–20kW are no longer enough. Hyperscale clusters now consume tens of megawatts, pushing power and thermal limits of existing infrastructure.
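To get a feel for what that density gap means, here is a small back-of-the-envelope sketch. The 20 MW cluster size is my own assumption (just one value in the "tens of megawatts" range), and `racks_needed` is a hypothetical helper, not something from the white paper.

```python
import math


def racks_needed(cluster_power_kw: float, rack_power_kw: float) -> int:
    """Racks required to host a cluster, rounding up to whole racks."""
    return math.ceil(cluster_power_kw / rack_power_kw)


# Assumption: a 20 MW cluster, chosen only for illustration.
CLUSTER_KW = 20_000

for rack_kw in (10, 20, 1_000):
    count = racks_needed(CLUSTER_KW, rack_kw)
    print(f"{rack_kw:>5} kW per rack -> {count} racks")
```

Under these assumptions the same cluster goes from thousands of traditional racks to a couple of dozen 1MW racks, ignoring everything else (power sidecars, cooling, networking) that also takes floor space.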

Obviously, not many facilities are prepared to absorb this impact in terms of resources.

⚡ Enter the 1MW Rack⌗

The shift to 1MW AI racks marks an evolution in data center infrastructure, driven by the exponential compute demands of large-scale AI models. Traditional 10–20kW racks simply can’t deliver the power density required for today’s training workloads, which are increasingly concentrated into high-performance accelerator pods.

To overcome this, the OCP community is looking at a series of foundational design changes. One of them is transitioning from 48V to ±400–800VDC power delivery, which minimizes electrical losses and enables thinner, more efficient busbars.
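Why does a higher voltage help? A rough sketch, using only Ohm's law: for a fixed power, current is I = P / V, and resistive loss in a conductor is I²R. The function names are hypothetical, and I am assuming an idealised case where the conductor resistance stays the same across voltages (in practice the busbars would be resized).

```python
def bus_current_a(power_w: float, voltage_v: float) -> float:
    """Current (A) needed to deliver power_w at voltage_v: I = P / V."""
    return power_w / voltage_v


def relative_i2r_loss(v_low: float, v_high: float) -> float:
    """How many times larger the I^2 * R loss is at v_low than at v_high,
    for the same delivered power and the same conductor resistance."""
    return (v_high / v_low) ** 2


RACK_POWER_W = 1_000_000  # a 1MW rack

for volts in (48, 400, 800):
    amps = bus_current_a(RACK_POWER_W, volts)
    print(f"{volts:>3} V -> {amps:,.0f} A")

ratio = relative_i2r_loss(48, 800)
print(f"Loss at 48 V vs 800 V (same conductor): about {ratio:.0f}x")
```

At 48V a 1MW rack would need more than 20,000 A on the busbar, while at 800V it needs 1,250 A, which is why thinner busbars become feasible.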

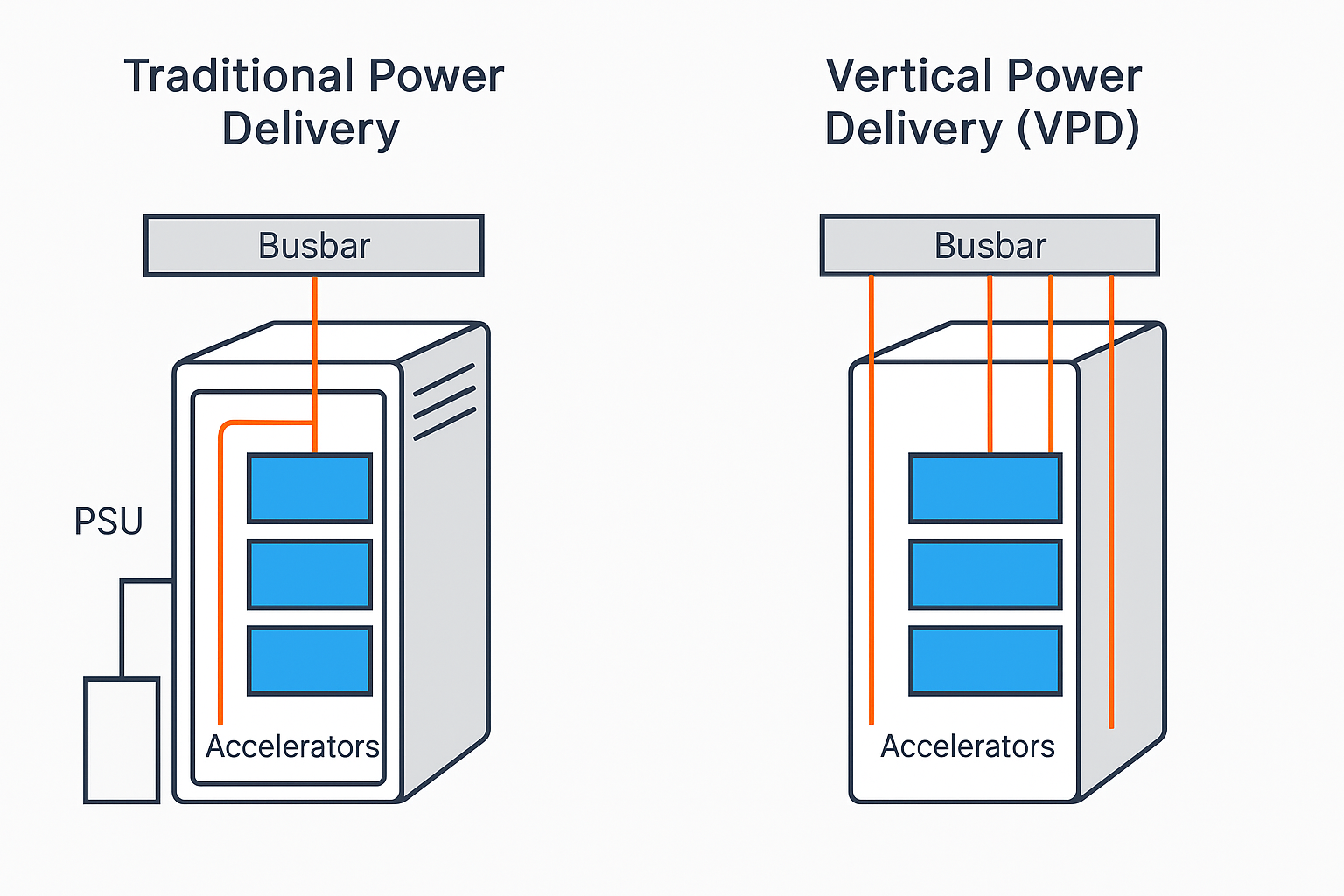

I learnt about the concept of vertical power delivery (VPD), which is a new way of bringing electricity to the chips inside a server. In traditional designs, power travels horizontally across circuit boards before reaching the processors. As chips get more powerful and hungry for electricity, these long paths waste energy as heat (called IR loss) and make it harder to keep everything cool.

With VPD, electricity is delivered straight down from above or below, like plugging a lamp directly into the ceiling instead of running a cable across the room. This shorter, more direct path means less wasted energy, cooler chips, and more space inside the rack for additional compute modules. This may sound mundane at first, but it can reduce power losses by 70% compared to traditional lateral power distribution!

When combined with moving power conversion and battery backup units (BBUs) into separate sidecar racks, it frees up even more room for GPUs and accelerators in the main rack, helping achieve the ultra-dense, highly efficient design needed for next-generation AI systems.

On top of that, VPD reduces IR losses at the chip level and improves thermal performance!

These racks also depend on advanced cooling technologies, as air cooling can no longer manage the heat loads—requiring direct-to-chip liquid loops, immersion, or even two-phase cooling to sustain operability. Collectively, these innovations make it possible for a single rack to support workloads that previously spanned an entire row, setting the stage for massively dense and energy-efficient AI clusters.
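To see why air is out of the question, here is a rough estimate of the liquid flow a 1MW rack would need, using the basic heat balance P = ṁ · c_p · ΔT. This is only a sketch under my own assumptions: all heat goes into a single-phase water loop, water has c_p ≈ 4186 J/(kg·K) and roughly 1 kg per litre, and the coolant warms by 10 K across the rack. None of these numbers come from the white paper, and the function names are hypothetical.

```python
WATER_CP_J_PER_KG_K = 4186.0  # specific heat of water (approximate)
WATER_KG_PER_LITRE = 1.0      # density of water (approximate)


def coolant_mass_flow_kg_s(heat_w: float, delta_t_k: float,
                           cp: float = WATER_CP_J_PER_KG_K) -> float:
    """Mass flow needed to carry heat_w away with a delta_t_k temperature rise."""
    return heat_w / (cp * delta_t_k)


def litres_per_minute(mass_flow_kg_s: float,
                      density: float = WATER_KG_PER_LITRE) -> float:
    """Convert a mass flow in kg/s to a volume flow in L/min."""
    return mass_flow_kg_s / density * 60.0


# Assumption: 1MW of heat, 10 K coolant temperature rise.
flow = coolant_mass_flow_kg_s(1_000_000, 10.0)
print(f"{flow:.1f} kg/s, roughly {litres_per_minute(flow):.0f} L/min")
```

With these assumptions a single rack needs on the order of 24 kg of water per second. A smaller allowed temperature rise pushes the required flow up proportionally.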

Open-72: The Open AI Pod⌗

To meet the demands of large-scale AI training, the Open Compute Project has introduced the concept of the Open-72 Pod (a concept I learnt about from this paper), a fully open and modular approach to high-density compute.

Designed to house 72 accelerators per pod, it delivers parallel processing power while leveraging high-bandwidth fabrics like NVLink, UALink, and Ultra Ethernet for seamless interconnectivity.

Unlike proprietary solutions such as NVIDIA’s NVL72, the Open-72 Pod uses open specifications that allow multiple vendors to contribute compatible trays for compute, power, cooling, and management.

This composable architecture not only encourages healthy competition in the supply chain but also ensures interoperability and easier scaling for data center operators.

By standardizing these building blocks, OCP makes it possible to assemble hyperscale AI clusters without being locked into a single vendor ecosystem, paving the way for a truly collaborative, future-proof AI infrastructure, which is of course aligned with OCP’s mission.

Why Open Standards?⌗

Unfortunately, AI infrastructure is dominated by proprietary, closed systems, from NVIDIA’s DGX servers to Google’s TPU pods and custom hyperscaler-only designs. While these players have powered many early breakthroughs, they also create vendor lock-in, limiting flexibility and making the global supply chain more fragile.

As someone who has always been a big fan of open source, I find OCP’s vision particularly exciting because it brings that same spirit of openness and collaboration to hardware. Instead of relying on monolithic, proprietary solutions, OCP defines open, standardized building blocks for compute, power delivery, cooling, and management. This approach fosters true interoperability between vendors, reduces costs through shared standards, and accelerates innovation through community-driven development. To me, this feels like a turning point, an opportunity to build AI infrastructure that’s not only more resilient and adaptable, but also fundamentally more inclusive and open, just like the best of open source software.

🌱 How green is this?⌗

The shift to 1MW open racks isn’t just about packing more compute into a smaller footprint; it also opens the door to meaningful sustainability gains.

With this architecture, data centers can capture and repurpose waste heat instead of venting it into the atmosphere, integrate battery energy storage systems (BESS) at the facility level to smooth out energy use, and embrace modular upgrades that avoid full hardware replacements and reduce e-waste.

I am excited to see progress towards scaling AI infrastructure, and as someone who also cares about green energy and sustainable tech, I find this evolution especially important.

AI is rapidly becoming the backbone of our digital future, and it’s critical that the infrastructure behind it grows responsibly.

Open standards like these make it easier for the industry to adopt renewable energy sources, improve energy efficiency, and meet global carbon reduction goals—not as an afterthought, but as a core design principle.

💡 Final Thoughts⌗

It feels like, just as OCP standardised hyperscale servers a decade ago, the Open Systems for AI initiative is laying the groundwork for the next generation of data centers.

With 1MW racks and an open pod architecture, there is a real opportunity to reshape the future of AI infrastructure into something far more aligned with the values many of us in tech care about.

We’re talking about systems that are scalable, capable of keeping up with the explosive growth in compute demand; sustainable, by design—reducing power losses and repurposing waste heat; and most importantly, free from vendor lock-in, encouraging a truly competitive and diverse ecosystem of hardware innovators.

Personally, I see this as more than just an evolution in how data centers are built. It’s a chance to bring the principles I’ve always believed in—openness, interoperability, and sustainability—to the foundation of AI itself.

Just as open source transformed software by making it more collaborative and accessible, I believe open infrastructure can do the same for AI. And that’s a future worth building.

Hope you enjoyed this white paper as much as I did!⌗

Have thoughts on open AI infrastructure? Join the OCP community discussions and help shape the future of data centers.